Have you ever caught yourself staring at a snippet of AI-generated code, wondering whether it’s worth a detailed review? The short answer: absolutely. Fast-forward to 2030, and code reviews will matter even more than they do today—despite (or maybe because of) AI’s growing presence in our development process.

But why? Let’s find out.

The Shifting Paradigm in Software Development

From “Code Writer” to “AI Supervisor”

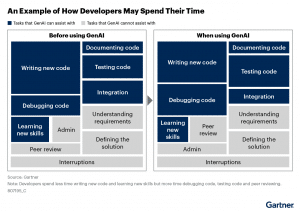

Not long ago, a developer’s day meant typing out loops, conditionals, and API calls. Today, many devs guide AI models, nudging them with prompts and refining whatever they produce. GitHub CEO Thomas Dohmke has even predicted that Copilot could soon write 80% of developers’ code, a prediction that feels less far-fetched every day.

But more AI-written code doesn’t mean less responsibility. You might be typing fewer lines manually, yet you’re still on the hook for correctness, security, and whether the generated code fits into your system’s architecture. In other words, developers are becoming part programmer, part “editor-in-chief” for the AI.

Why Code Reviews Still Matter

AI Isn’t Perfect—Especially Under Security Pressure

A recent study found that in repositories already burdened by security debt, AI-generated code introduced 72% more vulnerabilities than manually written code. If your codebase isn’t in pristine shape, an AI assistant can unknowingly replicate its flaws. That’s a wake-up call for industries that handle sensitive data, like fintech or healthcare. Your code might compile and pass a few tests but still hide security landmines.
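
No snippet can reproduce the study’s 72% figure, but the failure mode itself is easy to illustrate. Below is a hypothetical Python sketch (the `users` table and both function names are invented for illustration) of code that runs, passes a happy-path test, and still hides a classic SQL injection hole, next to the parameterized version a reviewer should ask for.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Hypothetical helper: builds SQL by string interpolation.
    # Works for normal names, but a crafted name can rewrite the query.
    query = f"SELECT id, name FROM users WHERE name = '{username}' ORDER BY id"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized query: sqlite3 binds `username` as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ? ORDER BY id", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

# The happy-path test passes for both versions.
assert find_user_unsafe(conn, "alice") == [(1, "alice")]
assert find_user_safe(conn, "alice") == [(1, "alice")]

# A crafted input returns every row through the unsafe version only.
payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]
print(find_user_safe(conn, payload))    # []
```

Both functions return the same rows for ordinary input, which is exactly why a test suite that only checks the happy path can wave the unsafe one through.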

Oversight Builds Real Understanding

Fifty-seven percent of junior developers admitted they couldn’t explain the AI-generated code they committed. That’s a big deal. Code without comprehension is technical debt waiting to happen: nobody can fix what they never understood. An active code review process, whether the code is AI-generated or not, forces devs to justify their choices, expose hidden assumptions, and spot potential pitfalls. That’s also how junior folks learn to think like senior engineers.

“Functional” Isn’t Always “Production-Ready”

Having code run on the first try is great, but it’s only the start. Production code must meet performance benchmarks, obey architecture standards, and keep readability in mind. AI tools might generate something correct but not necessarily optimal—or even comprehensible. Human reviewers close that gap, ensuring the code won’t cause headaches (or downtime) later.
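
To make “correct but not optimal” concrete, here is a hypothetical Python example, not taken from any particular AI tool: two order-preserving de-duplication functions that return identical results, where the first would sail through functional tests yet scales quadratically with input size.

```python
import timeit


def dedupe_quadratic(items):
    # Correct, but `x not in result` scans a growing list: O(n^2) overall.
    result = []
    for x in items:
        if x not in result:
            result.append(x)
    return result


def dedupe_linear(items):
    # Same output: dict keys are unique and keep insertion order (Python 3.7+).
    # O(n) on average for hashable items.
    return list(dict.fromkeys(items))


# Both pass the same functional check...
sample = [3, 1, 3, 2, 1]
assert dedupe_quadratic(sample) == dedupe_linear(sample) == [3, 1, 2]

# ...but their cost diverges as input grows (timings vary by machine).
big = list(range(2000)) * 2
print("quadratic:", timeit.timeit(lambda: dedupe_quadratic(big), number=1))
print("linear:   ", timeit.timeit(lambda: dedupe_linear(big), number=1))
```

The sketch prints timings rather than asserting exact values, since they depend on the machine. What matters is the growth rate, which a reviewer can spot by reading the loop without running anything.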

Challenges of Code Reviews Today

Time-to-First-Review

The dream is to merge within hours. The reality? Pull requests can languish for days in a busy backlog. Meanwhile, your team stalls—leading to frustration, context switching, and slower product delivery.
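
If you want to track this, time-to-first-review is simple arithmetic over pull request timestamps. The sketch below assumes made-up PR records with hypothetical `opened` and `first_review` fields (real data would come from your Git host) and treats still-unreviewed PRs as waiting until now, so stale PRs don’t quietly drop out of the metric.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical PR records (made-up data): when each PR was opened and
# when its first review arrived. None means nobody has reviewed it yet.
prs = [
    {"id": 101, "opened": datetime(2025, 3, 3, 9, 0), "first_review": datetime(2025, 3, 3, 11, 30)},
    {"id": 102, "opened": datetime(2025, 3, 3, 14, 0), "first_review": datetime(2025, 3, 5, 10, 0)},
    {"id": 103, "opened": datetime(2025, 3, 4, 8, 0), "first_review": None},
]


def time_to_first_review(pr: dict, now: datetime) -> timedelta:
    # Unreviewed PRs count as waiting until `now` instead of being skipped.
    end = pr["first_review"] if pr["first_review"] is not None else now
    return end - pr["opened"]


now = datetime(2025, 3, 6, 8, 0)
waits = [time_to_first_review(pr, now) for pr in prs]
hours = [w.total_seconds() / 3600 for w in waits]

print("median hours to first review:", median(hours))  # 44.0
stale = [pr["id"] for pr, w in zip(prs, waits) if w > timedelta(hours=24)]
print("waiting more than a day:", stale)  # [102, 103]
```

For this sample the median wait is 44 hours, and two of the three PRs have waited more than a day. The median is used instead of the mean so that one unusually fast or slow review doesn’t dominate the number.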

Security Blind Spots

Even the best static analyzers can’t catch every flaw, especially in a legacy or complex codebase. Human reviewers excel at spotting inconsistencies that automated tools, AI included, might miss, like an outdated authentication approach or a newly introduced PII exposure. And in a world where AI can replicate existing insecure patterns, humans are the last line of defense.

Junior Reviewers Feeling Adrift

When new devs rely heavily on AI, they’re less likely to develop the instincts needed to spot deeper logic or structural issues. That makes code reviews an ideal coaching vehicle. But if the review process doesn’t bake in education, and it’s just senior devs firing off rubber-stamp “LGTM” approvals, nobody grows.

AI-Powered Code Reviews: A Glimpse

AI code generation has grabbed headlines, but AI-driven code reviews are following close behind. We’re starting to see automated checks that flag syntax errors, style issues, or security concerns the moment a pull request hits GitHub.

- Instant Feedback: AI reviewers can pounce on your PR right away, highlighting obvious mistakes so you don’t have to wait for a human to dig in.

- Knowledge Base: These tools can be trained on your internal best practices—everything from naming conventions to compliance rules—so the feedback is relevant to your team.

- Freeing Up Reviewers: With routine checks handled by AI, human reviewers can focus on the big questions: Does this approach scale? Is it logically consistent with the rest of the system?
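
AI reviewers pick up conventions in their own ways, but the simplest way to see what “encoding a team convention” means is a plain rule-based check. The sketch below is that swapped-in technique, not how any particular AI review tool works: it uses Python’s standard `ast` module to flag function names that break a hypothetical snake_case naming rule.

```python
import ast
import re

# Hypothetical team rule: function names must be snake_case
# (up to two leading underscores allowed, so dunders like __init__ pass).
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")


def check_function_names(source: str) -> list[str]:
    """Return one finding per function whose name breaks the rule."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                findings.append(
                    f"line {node.lineno}: function '{node.name}' is not snake_case"
                )
    return findings


sample = '''
def load_config(path):
    return path

def ParseUser(raw):
    return raw
'''

for finding in check_function_names(sample):
    print(finding)  # line 5: function 'ParseUser' is not snake_case
```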

Example: Kodus

One platform embracing this model is Kodus. It slots into your Git workflow and analyzes each pull request for potential issues, often suggesting fixes in real time. Rather than just flagging generic security or style pitfalls, Kodus can learn your team’s conventions and deliver insights that cut through the noise. Think of it as a co-reviewer who doesn’t sleep and never loses focus.

Of course, no AI is perfect. Even the best models can produce false positives or overlook critical edge cases, so engineers still call the shots. But these tools help bridge the gap between swift code generation and truly production-ready software.

The Future of Code Reviews in 2030

1. Continuous, In-Editor Review

By 2030, we’ll likely see real-time AI feedback integrated into every major IDE. Think of it as an always-on code review assistant that flags issues as you type. The old “submit PR, wait, fix, repeat” cycle shrinks dramatically.

2. Humans as the Strategic Gatekeepers

With the routine stuff handled by AI, human reviewers will focus on system design, data flows, and risk management. Code review meets architectural review. The deeper conceptual feedback—“Can we solve this with fewer microservices?”—will occupy humans, while the AI polices style rules.

3. Higher Standards by Default

As AI gets better at catching bugs, the bar for what counts as “reviewed” code will rise. You won’t have an excuse for shipping slow or insecure endpoints. At the same time, product cycles will accelerate: review time compresses, and teams can safely ship more often.

4. New Pitfalls

We’ll face novel challenges:

- Complacency: Will devs become “lazy” reviewers, assuming AI has it covered?

- Attribution of Failure: If AI-approved code flops in production, who’s at fault?

- Maintaining AI: Constantly updating your AI models with correct examples and weeding out flawed patterns becomes a new DevOps discipline in its own right.

Final Thoughts

The rise of AI-generated code doesn’t reduce the need for code reviews; it amplifies it. Tools can churn out lines at lightning speed, but those lines still need human insight. This matters even more for teams carrying security debt or staffed with less-experienced developers who might treat AI suggestions as gospel.

The real win is balance: AI automates repetitive checks, freeing humans to tackle the meatier questions of architecture, performance, and design intent. By 2030, code reviews won’t just be about catching bugs—they’ll be your best opportunity to level up your team, refine your product vision, and stay ahead of ever-shifting technical challenges.

So if you’re wondering how code reviews will look in 2030, the answer is: more essential, more collaborative, and more powered by AI than ever before—yet still firmly guided by human expertise.