How to measure DORA Metrics

As AI coding assistants become part of every developer’s default toolkit, many engineering teams have started producing a lot more code. Pull requests pile up and CI pipelines run all the time. At first glance, this looks like a huge productivity leap. The problem is that this speed increase often hides new bottlenecks. Without a clear way to measure software delivery performance, it’s hard to know whether the team is actually shipping faster or just generating more rework. That’s where DORA metrics help bring clarity—as long as they’re used to understand the system, not just to track isolated numbers.

The challenge isn’t knowing the four metrics, but using them consistently and knowing how to interpret them in a scenario where the way code gets written is changing fast. Isolated numbers on a dashboard don’t create improvement if they don’t reflect the full development cycle or if teams are measuring the same concepts in different ways.

What exactly are we measuring?

For DORA to generate any real value, it’s essential that everyone is aligned on what’s being measured. Vague definitions end up producing inconsistent data, which gets in the way more than it helps. In many cases, it’s better to measure nothing than to base decisions on numbers that each team interprets differently.

This guide is exactly about that: how to measure DORA Metrics the right way and use each one as a lever to improve delivery.

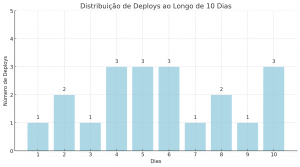

Deployment Frequency

This is the most straightforward metric: it measures how many times per day, week, or month your team deploys to production. Simple as it looks, it reveals a lot about how the team works.

If the team deploys frequently, it means cycles are short, risk is lower, and feedback comes faster. If deploys are rare, there may be a bottleneck in PR reviews, fear of breaking something, or a lack of automation in the pipeline.

How to calculate:

- Define the period (e.g., 10 days)

- Count how many deploys happened

- Divide the number of deploys by the number of days

If there were 20 deploys in 10 days, you have an average of 2 deploys per day.
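Here is a minimal Python sketch of the same calculation. It assumes you have already exported production deploy timestamps from your CI/CD tool. The function name `deployment_frequency` and the January 2024 dates are illustrative and do not come from any tool's API.

```python
from datetime import datetime, timedelta


def deployment_frequency(deploys: list[datetime], start: datetime, days: int) -> float:
    """Average production deploys per day within [start, start + days)."""
    end = start + timedelta(days=days)
    # Count only deploys that fall inside the chosen period.
    in_period = [d for d in deploys if start <= d < end]
    return len(in_period) / days


# The example above: 20 deploys spread over 10 days (one every 12 hours).
start = datetime(2024, 1, 1)
deploys = [start + timedelta(hours=12 * i) for i in range(20)]
print(deployment_frequency(deploys, start, days=10))  # 2.0
```

The period is half-open, so a deploy at exactly `start + days` belongs to the next period and is never counted twice.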

Benchmarks (State of DevOps Report; exact thresholds vary between report editions):

- Low performance: less than once per month

- Medium: between 1x/month and 1x/week

- High: at least 1x/week

Lead Time for Changes

This is one of the most revealing metrics for any technical leadership team. Lead Time for Changes (also called Change Lead Time) measures the time between the first commit of a change and the moment that change reaches production.

If that time is high, something is blocking the flow. It could be slow reviews, an unstable staging environment, manual tests, or too much bureaucracy in the process.

How to measure:

- Record the timestamp of the first commit

- Record the timestamp of the production deploy for that same change

- Subtract the first-commit timestamp from the deploy timestamp

Example:

- Initial commit: January 1

- Deploy: January 5

- Lead Time = 4 days
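The subtraction is easy to automate. The sketch below reproduces the January 1 to January 5 example. The year 2024 and the extra sample changes are illustrative assumptions. Using a median to summarize many changes is our choice here, not a DORA requirement.

```python
from datetime import datetime, timedelta
from statistics import median


def lead_time(first_commit: datetime, deployed: datetime) -> timedelta:
    """Lead Time for Changes for one change: deploy time minus first-commit time."""
    if deployed < first_commit:
        raise ValueError("deploy timestamp is earlier than the first commit")
    return deployed - first_commit


# The example above: first commit on January 1, deployed on January 5.
lt = lead_time(datetime(2024, 1, 1), datetime(2024, 1, 5))
print(lt.days)  # 4

# Across many changes, a median is less distorted by one stuck PR than a mean.
changes = [
    (datetime(2024, 1, 1), datetime(2024, 1, 5)),   # 4 days
    (datetime(2024, 1, 2), datetime(2024, 1, 3)),   # 1 day
    (datetime(2024, 1, 3), datetime(2024, 1, 20)),  # 17 days
]
median_days = median(lead_time(c, d).total_seconds() for c, d in changes) / 86400
print(median_days)  # 4.0
```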

Benchmarks:

- Low: more than one month

- Medium: between 1 week and 1 month

- High: less than 1 week

Want to go one step further? Break lead time into parts (e.g., time to open a PR, time to merge, time to deploy) to find where the real bottleneck is.
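A breakdown like this needs four timestamps per change, which you can usually assemble from Git, the code host, and the CI/CD tool. The sketch below assumes those four timestamps. The `ChangeTimeline` class and stage names are hypothetical, and the dates are made up so that the stages add up to the 4-day example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ChangeTimeline:
    """Hypothetical timestamps for one change, gathered from Git and the CI/CD tool."""
    first_commit: datetime
    pr_opened: datetime
    pr_merged: datetime
    deployed: datetime


def lead_time_breakdown(c: ChangeTimeline) -> dict[str, timedelta]:
    """Split total lead time into consecutive stages that sum to the whole."""
    return {
        "time_to_open_pr": c.pr_opened - c.first_commit,
        "time_to_merge": c.pr_merged - c.pr_opened,
        "time_to_deploy": c.deployed - c.pr_merged,
    }


change = ChangeTimeline(
    first_commit=datetime(2024, 1, 1, 9, 0),
    pr_opened=datetime(2024, 1, 1, 17, 0),
    pr_merged=datetime(2024, 1, 4, 11, 0),
    deployed=datetime(2024, 1, 5, 9, 0),
)
for stage, duration in lead_time_breakdown(change).items():
    print(stage, duration)
```

Here the stages sum to 4 days. Review and merge take 2 days and 18 hours of that, so review is where to look first.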

Change Failure Rate

This metric shows the proportion of deploys that result in a rollback, a hotfix, or a production incident. It’s essential for understanding the stability of your deliveries.

If the team is shipping fast but breaking something all the time, the issue isn’t speed—it’s quality. This is the metric that forces you to look at tests, QA coverage, reviews, and requirements validation.

How to calculate:

- Count all deploys made in a period

- Count how many caused a failure (a rollback, a hotfix, or an incident)

- Divide failed deploys by the total and multiply by 100

Example:

- 20 deploys, 3 with issues

- CFR = (3 / 20) × 100 = 15%
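The sketch below performs the same calculation on a list of deploy records. The `Deploy` class and its `caused_failure` flag are assumptions. In practice, that flag would come from however your team links rollbacks, hotfixes, and incidents to deploys.

```python
from dataclasses import dataclass


@dataclass
class Deploy:
    """One production deploy. `caused_failure` is an assumed flag, set when the
    deploy led to a rollback, hotfix, or production incident."""
    id: str
    caused_failure: bool


def change_failure_rate(deploys: list[Deploy]) -> float:
    """Percentage of deploys in the period that caused a failure."""
    if not deploys:
        raise ValueError("no deploys in the period")
    failed = sum(1 for d in deploys if d.caused_failure)
    # Multiply before dividing to keep the arithmetic exact for whole percentages.
    return 100 * failed / len(deploys)


# The example above: 20 deploys, 3 with issues.
deploys = [Deploy(id=f"deploy-{i}", caused_failure=i < 3) for i in range(20)]
print(change_failure_rate(deploys))  # 15.0
```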

Benchmarks:

- Low performance: above 15%

- Medium: between 5% and 15%

- High: less than 5%

Mean Time to Recovery

Failures will happen. The question is: can your team react quickly?

MTTR shows how long it takes, on average, to restore service after a production issue. It’s a measure of your operational resilience.

How to calculate:

- List incidents in the period

- Record the start and end timestamps for each one

- Calculate each incident’s duration, then take the average

Example:

- Incidents: 3

- Total duration: 135 minutes

- MTTR = 135 / 3 = 45 minutes
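Here is a minimal sketch of the same average, assuming each incident is exported as a (started, resolved) pair. The example above gives only the 135-minute total, so the individual durations below (30, 45, and 60 minutes) are illustrative.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - started) across the incidents in the period."""
    if not incidents:
        raise ValueError("no incidents in the period")
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


# Three incidents lasting 30, 45, and 60 minutes: 135 minutes in total.
t0 = datetime(2024, 1, 10, 14, 0)
incidents = [
    (t0, t0 + timedelta(minutes=30)),
    (t0 + timedelta(days=2), t0 + timedelta(days=2, minutes=45)),
    (t0 + timedelta(days=5), t0 + timedelta(days=5, minutes=60)),
]
print(mean_time_to_recovery(incidents))  # 0:45:00
```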

Benchmarks:

- Low performance: more than 1 day

- Medium: between 1h and 1 day

- High: less than 1h

A high MTTR usually points to a lack of observability, poorly defined response processes, or low team autonomy during incidents.

Why measuring these metrics well changes the game

Looking at these metrics superficially doesn’t solve anything. The value is in using them to answer real questions:

- Why are our deliveries slow?

- What’s blocking us more: review, tests, deploy, or incidents?

- Are we shipping faster or just generating more rework?

Building a pipeline for DORA metrics

After you have clear definitions, you need to automate data collection. Manual tracking isn’t reliable and doesn’t scale. The goal is to pull data directly from the tools your teams already use.

Integrating tools for data collection

You can get almost everything you need from your day-to-day tools:

- Version control systems (Git): Your source of truth for commit data, which is the starting point for Change Lead Time.

- CI/CD platforms (GitHub Actions, Jenkins, CircleCI): These tools know when deploys happen and whether they succeeded or failed, providing Deployment Frequency and the end point of Change Lead Time.

- Incident management systems (PagerDuty, Opsgenie): Essential for tracking MTTR, since they record when an incident is opened, acknowledged, and resolved.

- Monitoring and observability platforms (Datadog, New Relic): Critical for detecting failures early and correlating them with specific deploys to calculate Change Failure Rate.
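Once deploy timestamps from the CI/CD tool and incident timestamps from the incident or monitoring tool are in one place, they need to be linked before Change Failure Rate can be computed. The sketch below uses a simple attribution heuristic of our own, not a standard: blame each incident on the most recent deploy before it, but only if that deploy happened within a 24-hour window. Real setups often also match on service name or deploy ID. The function name `failed_deploys` is hypothetical.

```python
from bisect import bisect_right
from datetime import datetime, timedelta


def failed_deploys(deploy_times: list[datetime], incident_starts: list[datetime],
                   window: timedelta = timedelta(hours=24)) -> set[datetime]:
    """Return the deploys blamed for at least one incident.

    Assumed heuristic: each incident is attributed to the latest deploy at or
    before its start time, but only if that deploy happened within `window`.
    """
    times = sorted(deploy_times)
    blamed: set[datetime] = set()
    for started in incident_starts:
        i = bisect_right(times, started) - 1  # index of the latest deploy <= started
        if i >= 0 and started - times[i] <= window:
            blamed.add(times[i])
    return blamed


deploys = [datetime(2024, 1, d, 10, 0) for d in range(1, 5)]  # Jan 1-4 at 10:00
incidents = [
    datetime(2024, 1, 2, 12, 0),  # 2h after the Jan 2 deploy: blamed
    datetime(2024, 1, 2, 15, 0),  # same deploy again, counted only once
    datetime(2024, 1, 5, 18, 0),  # 32h after the last deploy: not attributed
]
blamed = failed_deploys(deploys, incidents)
cfr = 100 * len(blamed) / len(deploys)
print(cfr)  # 25.0
```

A deploy that causes two incidents still counts as one failed deploy, which matches how the rate is defined.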

Ensuring data consistency

Automation is only useful if the data is accurate. The most important step is to standardize metric definitions across all engineering teams. Establish clear data capture points in your workflows, like using specific labels in pull requests or standardized event logs in the CI/CD pipeline. This removes ambiguity and ensures that, when you look at a dashboard, you’re comparing equivalent things across different teams and services.
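One way to enforce shared definitions is to validate every event against a single schema before it reaches the dashboard. The sketch below is hypothetical: the field names, allowed values, and `DeployEvent` class are examples, not a standard format. It rejects values like `"prod"` that another team might emit instead of `"production"`, and it also rejects timestamps without a time zone.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical shared vocabulary: every team's pipeline must emit one of these values.
ALLOWED_ENVIRONMENTS = {"production", "staging"}
ALLOWED_STATUSES = {"success", "failure", "rolled_back"}


@dataclass(frozen=True)
class DeployEvent:
    """One standardized deploy event, as each team's CI/CD pipeline might log it."""
    team: str
    service: str
    environment: str
    status: str
    commit_sha: str
    deployed_at: datetime

    def __post_init__(self) -> None:
        # Reject values that would make cross-team dashboards compare different things.
        if self.environment not in ALLOWED_ENVIRONMENTS:
            raise ValueError(f"unknown environment: {self.environment!r}")
        if self.status not in ALLOWED_STATUSES:
            raise ValueError(f"unknown status: {self.status!r}")
        if self.deployed_at.tzinfo is None:
            raise ValueError("deployed_at must be timezone-aware (e.g. UTC)")


event = DeployEvent(
    team="payments",
    service="checkout-api",
    environment="production",
    status="success",
    commit_sha="3f2a9c1",
    deployed_at=datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc),
)

try:
    DeployEvent("payments", "checkout-api", "prod", "success", "3f2a9c1",
                datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc))
except ValueError as err:
    print(err)  # unknown environment: 'prod'
```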

Using DORA metrics to actually improve

Numbers alone don’t say much. The value shows up when you observe how they change over time and use these metrics to understand what’s happening in the system—not to evaluate people or compare teams.

Looking at trends and context

Instead of getting stuck on absolute values, it’s worth watching patterns. Has Change Lead Time been creeping up? Did Deployment Frequency drop after a major restructure? When you connect these shifts to changes in process, architecture, or tooling, it becomes clear where the issues are.

For example, if lead time rises while the volume of AI-generated code grows, that often indicates the review process didn’t keep up with that change and has become a bottleneck.
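To answer a question like "has Change Lead Time been creeping up?", you can group lead times by month and compare the monthly medians. This is a minimal sketch. It assumes you already have (deploy date, lead time in hours) pairs, and the numbers below are invented purely to illustrate an upward trend.

```python
from collections import defaultdict
from datetime import date
from statistics import median


def monthly_median_lead_time(changes: list[tuple[date, float]]) -> dict[str, float]:
    """Median lead time (hours) per calendar month, keyed 'YYYY-MM' in chronological order."""
    by_month: dict[str, list[float]] = defaultdict(list)
    for deployed_on, hours in changes:
        by_month[deployed_on.strftime("%Y-%m")].append(hours)
    return {month: median(values) for month, values in sorted(by_month.items())}


changes = [
    (date(2024, 1, 8), 20.0), (date(2024, 1, 15), 30.0), (date(2024, 1, 22), 26.0),
    (date(2024, 2, 5), 34.0), (date(2024, 2, 12), 40.0), (date(2024, 2, 19), 38.0),
    (date(2024, 3, 4), 52.0), (date(2024, 3, 11), 47.0), (date(2024, 3, 18), 60.0),
]
trend = monthly_median_lead_time(changes)
print(trend)  # {'2024-01': 26.0, '2024-02': 38.0, '2024-03': 52.0}

# "Creeping up" here means every month is higher than the one before.
values = list(trend.values())
creeping_up = all(earlier < later for earlier, later in zip(values, values[1:]))
print(creeping_up)  # True
```

The next step is to line this trend up against dated changes in process or tooling.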

It’s also important to remember these metrics reflect the system as it is today, including architecture and domain complexity. A team working on a legacy monolith will naturally have different numbers than a team building a new microservice. Comparing those two scenarios directly almost always leads to the wrong conclusions. The point of DORA isn’t to rank teams, but to help each one improve relative to its own starting point.

A simple way to use DORA continuously

Step 1: Understand the baseline and form hypotheses

Before automating anything, record where the metrics are today, even if they’re estimates. Then, form a clear hypothesis about a problem you believe exists.

For example:

“We believe the increase in AI-generated code is making reviews harder, doubling pull request review time and pushing Change Lead Time up.”

That gives you a specific question to investigate.

Step 2: Collect data automatically

With a hypothesis defined, it’s clear what data you need. Configure the tools the team already uses to collect that information automatically. Start with a simple dashboard, focused only on the essential metrics.

Before sharing those numbers, it’s worth spending some time validating whether they make sense and whether they match the agreed definitions. Bad data gets in the way more than it helps.

Step 3: Review regularly and act on what shows up

Set a fixed cadence, like a monthly team meeting, to look at DORA metrics. When something shows up outside the normal range, treat it as a signal to investigate.

If Change Failure Rate went up last month, look at the incidents and rollbacks from that period. What do they have in common? From there, pick a small, specific improvement to test in the process.

FAQ: DORA Metrics in practice

Do I need to measure all 4 metrics from the start?

No. Start with one or two that are easier to extract and that answer urgent questions for your team. Lead Time and Deployment Frequency are good entry points.

How often should I track these metrics?

It depends on your cycle. Reviewing every sprint or every two weeks is usually enough to spot trends without overloading the team.

Do these metrics work for small teams too?

Yes. Small teams have the advantage of being able to act quickly on the data. The catch is sample size: with only a few deploys per period, a single failed deploy can swing the numbers sharply, so look at longer time windows or rolling averages before reacting.
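For a small team, one way to smooth those swings is to compute Change Failure Rate over a sliding window of weeks. The sketch below assumes weekly (deploys, failed deploys) counts and uses a 4-week window. The function name, window size, and sample counts are illustrative.

```python
import math


def rolling_change_failure_rate(weeks: list[tuple[int, int]], window: int = 4) -> list[float]:
    """CFR (%) over a sliding window of weeks.

    `weeks` holds (deploys, failed_deploys) per week. Counts are pooled across the
    window before dividing, so one quiet week can't swing the result on its own.
    Windows with no deploys yield NaN rather than a misleading 0%.
    """
    rates = []
    for end in range(window, len(weeks) + 1):
        chunk = weeks[end - window:end]
        deploys = sum(d for d, _ in chunk)
        failures = sum(f for _, f in chunk)
        rates.append(100 * failures / deploys if deploys else math.nan)
    return rates


# Weekly CFR alone would jump between 50%, 0%, 25%, and 100%.
weeks = [(2, 1), (3, 0), (1, 0), (2, 0), (4, 1), (1, 1)]
print(rolling_change_failure_rate(weeks))  # [12.5, 10.0, 25.0]
```

Pooling the counts, rather than averaging weekly percentages, gives each deploy equal weight. A week with a single deploy therefore counts for no more than that one deploy.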

How do we make sure these metrics aren’t used for micromanagement?

Be clear that the focus is improving the system, not measuring individuals. Share the data with the team and use it to start conversations, not to apply pressure. Culture comes before the dashboard.