Introduction

AI-powered IDEs blew up this past year—suddenly, every dev’s got a little robot sidekick.

But hey, coding is the fun part.

Code reviews? Not so much.

That’s where we started wondering: Can Large Language Models (LLMs) handle your pull requests?

We put the usual suspects (GPT-4o and Claude Sonnet 3.7) up against our pipeline-based approach, Kody, and this benchmark is what we got.

The Reality of LLM Code Reviews

Let’s be honest: letting an AI do your code review sounds amazing—until you realize LLMs can be really noisy.

They spew a ton of extra info, some of which might be irrelevant or downright incorrect. Here’s the lowdown:

- Training on Imperfect Code: LLMs see a mix of great code and absolutely hideous code. Guess what? That means they can absorb some questionable patterns.

- Noise Over Signal: Even when they find a legit bug, they bury it under a laundry list of “meh” suggestions.

- Syntax Over Logic: Sure, they’re decent with syntax, but deeper logic? Often missed. And yes, the irrelevant commentary doesn’t help.

TL;DR: Relying 100% on raw LLMs can make your code reviews messy. Enter Kody’s agentic pipeline—basically, a fancy way of saying “we filter out the nonsense so you get real, actionable feedback.”

How We Set Up the Benchmark

Dataset

We grabbed 10 pull requests (PRs) from a repo (still a work in progress, by the way) and ran three separate reviews on each, one per reviewer:

- Kody

- Claude Sonnet 3.7

- OpenAI GPT-4o

We know that 10 PRs might seem like a small dataset. However, our goal was to run a controlled, in-depth comparison before scaling up. Each PR was carefully selected to cover a range of issues, from security flaws to code style inconsistencies. Future benchmarks will explore a larger dataset and broader language support.

Metrics

We tracked:

- True Positives (TP): Real problems caught.

- False Positives (FP): Alerts that weren’t actually issues.

- False Negatives (FN): Real bugs that slipped by.

From there, we calculated:

- Precision: How trustworthy are the alerts? TP / (TP + FP)

- Recall: How many actual issues got flagged? TP / (TP + FN)

- F1 Score: The harmonic mean of Precision and Recall, 2 × Precision × Recall / (Precision + Recall), so you get one number to rule them all.
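These are the standard definitions, so they fit in a few lines of Python. This is a minimal sketch, assuming counts are tallied per reviewer; returning 0.0 when a denominator is zero is our own convention, and the counts in the example are made up, not benchmark data:

```python
from dataclasses import dataclass


@dataclass
class ReviewCounts:
    """Issue tallies for one reviewer (per PR, or summed across PRs)."""
    tp: int  # true positives: real problems caught
    fp: int  # false positives: alerts that weren't actually issues
    fn: int  # false negatives: real bugs that slipped by


def precision(c: ReviewCounts) -> float:
    """TP / (TP + FP); returns 0.0 when nothing was flagged (our convention)."""
    flagged = c.tp + c.fp
    return c.tp / flagged if flagged else 0.0


def recall(c: ReviewCounts) -> float:
    """TP / (TP + FN); returns 0.0 when there were no real issues (our convention)."""
    actual = c.tp + c.fn
    return c.tp / actual if actual else 0.0


def f1(c: ReviewCounts) -> float:
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Made-up counts for illustration only, not benchmark data.
example = ReviewCounts(tp=6, fp=2, fn=4)
print(precision(example), recall(example), round(f1(example), 3))
```

With those made-up counts, precision is 0.75, recall is 0.6, and F1 comes out around 0.667: one number that sits between the two but leans toward the weaker one.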

We also checked standard deviation and coefficient of variation (CV) to see if each model was consistent or just rolling dice on your PRs.

Ensuring Fair Testing of LLMs

To keep the benchmark fair and repeatable, we ran GPT-4o and Claude Sonnet 3.7 using the same prompt for code reviews. While the prompt itself isn’t publicly available, we ensured that it focused on code quality, security, and best practices, without favoring any specific approach.

This means both models were given the same context and instructions. No extra tuning, no cherry-picking.

What’s the Coefficient of Variation (CV)?

Basically, CV tells us if your results are all over the place or nicely grouped. It’s:

CV = Standard Deviation / Mean (multiply by 100% to get a percentage; the figures below are plain ratios)

- Dimensionless: Lets us compare different scales without confusion.

- Higher CV: Means the model’s performance is about as stable as a shaky Jenga tower.

- Normalization: Expresses variability relative to your average result, so a ±0.05 swing counts for more when the mean is 0.1 than when it is 0.6.
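Computing CV takes two calls from Python's standard library. This sketch assumes CV is taken over per-PR scores and uses the sample standard deviation (`statistics.stdev`); the benchmark doesn't say which variant it used, and both score lists below are invented:

```python
import statistics


def coefficient_of_variation(scores: list[float]) -> float:
    """CV as a plain ratio: sample standard deviation / mean.

    Multiply by 100 for a percentage. Needs at least two scores, and the
    mean must be non-zero.
    """
    mean = statistics.mean(scores)
    if mean == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return statistics.stdev(scores) / mean


# Hypothetical per-PR F1 scores for two imaginary reviewers.
steady = [0.60, 0.65, 0.70, 0.62]
erratic = [0.00, 0.90, 0.10, 0.80]
print(round(coefficient_of_variation(steady), 2))   # 0.07
print(round(coefficient_of_variation(erratic), 2))  # 1.03
```

The erratic reviewer has a lower average and wild swings, so its CV lands roughly 15× higher than the steady one's.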

Our Results (CV)

- Kody (0.402): The steadiest of the three.

- Claude (0.774): Kind of all over the place.

- GPT (1.651): Even more up-and-down than your local cryptocurrency.

Rule of Thumb:

- CV < 0.15 → super steady

- 0.15–0.30 → moderate fluctuations

- CV > 0.30 → brace yourself, it’s bumpy
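The rule of thumb maps directly onto a small lookup. In this sketch, counting exactly 0.15 and 0.30 as "moderate" is our assumption, since the bands don't say which side the boundaries fall on. Run against the reported CVs, all three models land in the top band, so the ranking is really about who is least bumpy:

```python
def consistency_band(cv: float) -> str:
    """Map a CV (plain ratio, not a percentage) to the rule-of-thumb bands."""
    if cv < 0.15:
        return "super steady"
    if cv <= 0.30:
        return "moderate fluctuations"
    return "bumpy"


# CVs reported in this benchmark.
reported = {"Kody": 0.402, "Claude": 0.774, "GPT": 1.651}
for name, cv in reported.items():
    print(f"{name}: {consistency_band(cv)}")
```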

Adding a Consistency Twist

Because we like being fair (but also practical), we introduced a consistency penalty (weight = 0.2) to whack down the scores of models that like to wander:

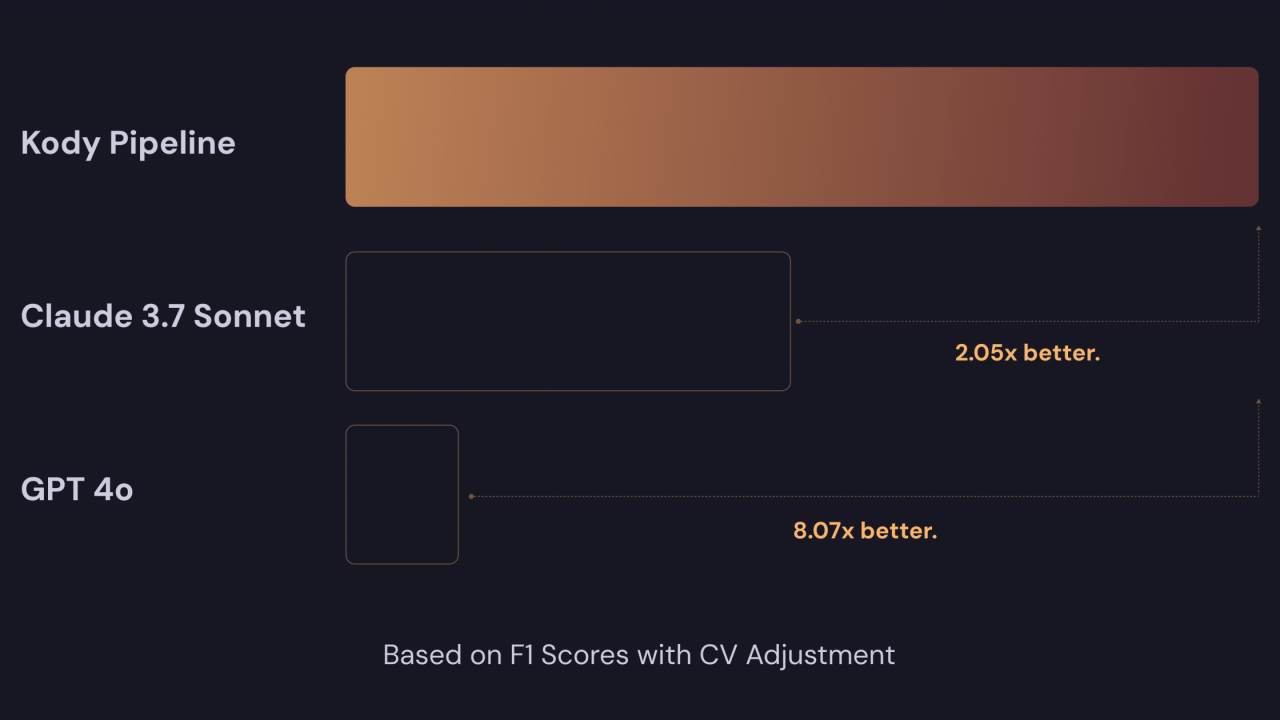

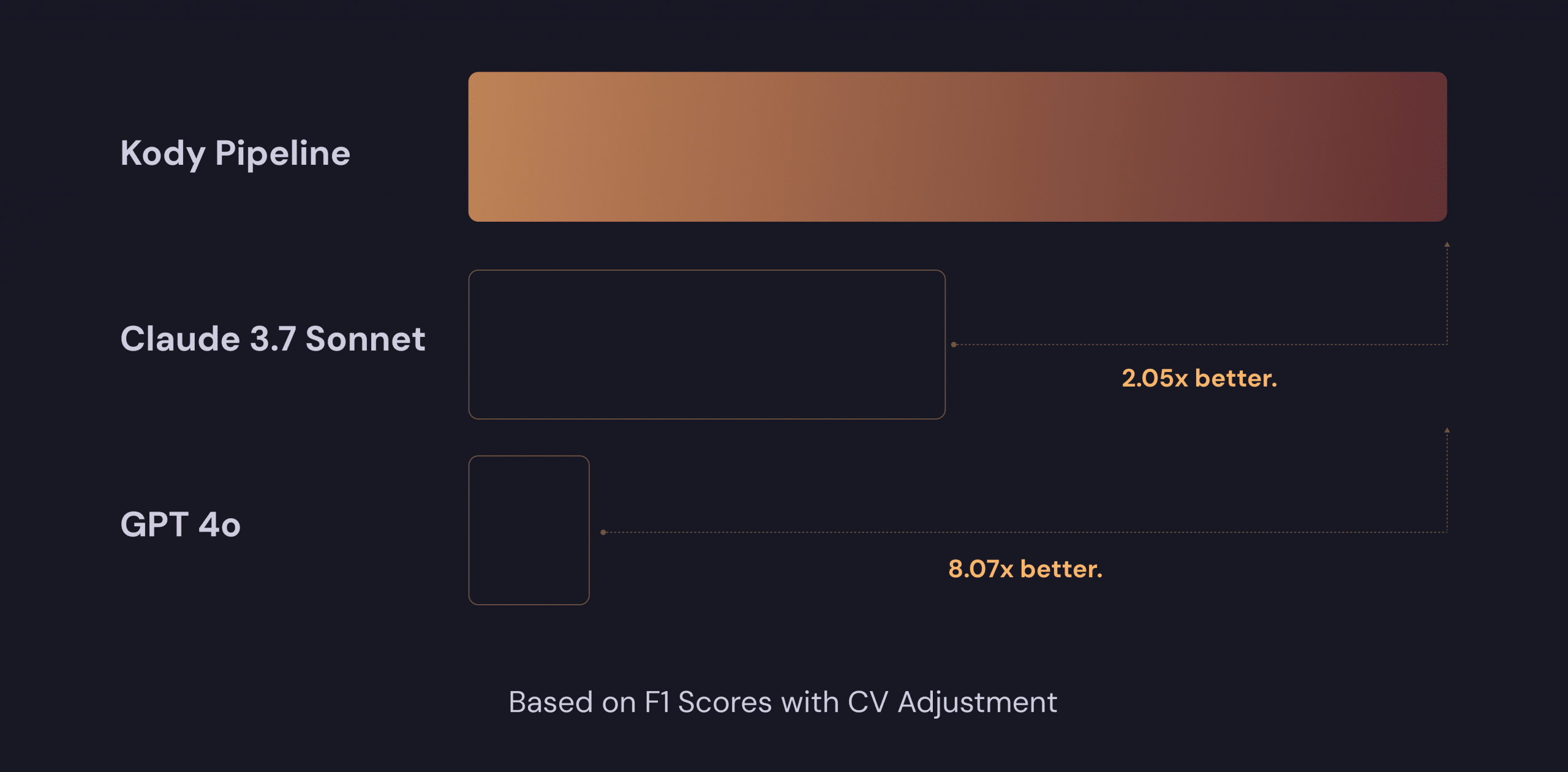

- Kody: Adjusted score ~0.581 (about 8% drop—no biggie)

- Claude: Adjusted ~0.283 (15.5% drop)

- GPT: Adjusted ~0.072 (33.0% drop—ouch)

If you want to be nicer, lower the weight (like 0.1). If you want to be savage, crank it to 0.5. We picked 0.2 because it punishes big fluctuations but doesn’t overshadow an otherwise good performance.
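The exact penalty formula lives in the images above, so what follows is a reconstruction rather than a copy. Each reported drop equals 0.2 × CV (0.2 × 0.402 ≈ 8.0%, 0.2 × 0.774 ≈ 15.5%, 0.2 × 1.651 ≈ 33.0%), which is what a multiplicative form, adjusted = score × (1 − weight × CV), would produce. The sketch assumes that form; `consistency_adjusted` is a hypothetical name, and the clamp is our own safeguard:

```python
def consistency_adjusted(score: float, cv: float, weight: float = 0.2) -> float:
    """Hypothetical reconstruction: shrink a score by (weight * CV).

    The penalty is clamped to [0, 1] so the result never goes negative;
    that clamp is our own safeguard, not part of the benchmark.
    """
    penalty = min(max(weight * cv, 0.0), 1.0)
    return score * (1.0 - penalty)


reported_cv = {"Kody": 0.402, "Claude": 0.774, "GPT": 1.651}

# Relative drop for each model at the nicer, default, and savage weights.
for weight in (0.1, 0.2, 0.5):
    drops = {
        name: f"{1 - consistency_adjusted(1.0, cv, weight):.1%}"
        for name, cv in reported_cv.items()
    }
    print(weight, drops)
```

Sweeping `weight` makes the trade-off visible: a low-CV model barely moves between 0.1 and 0.5, while a high-CV model like GPT-4o loses most of its score at the savage setting.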

Results

Let’s see the final scoreboard:

- F1 Original

  - Kody: ~0.65

  - Claude: ~0.48

  - GPT: ~0.18

  - Kody’s about 1.35× higher than Claude and 3.6× higher than GPT.

- F1 Adjusted (DP)

  - Kody: ~0.60

  - Claude: ~0.40

  - GPT: ~0.15

  - Kody’s ~1.5× better than Claude and 4× better than GPT.

- F1 Adjusted (CV)

  - Kody: ~0.58

  - Claude: ~0.28

  - GPT: ~0.07

  - Kody’s 2.1× better than Claude and 8.3× better than GPT. That’s basically light years in code-review land.
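The "N× better" figures are plain ratios of the scores. A quick check in Python using the rounded values from the scoreboard (so expect small rounding differences, e.g. 2.07 and 8.29 here for the quoted 2.1× and 8.3×):

```python
# Rounded scores from the scoreboard above.
scoreboard = {
    "F1 Original": {"Kody": 0.65, "Claude": 0.48, "GPT": 0.18},
    "F1 Adjusted (DP)": {"Kody": 0.60, "Claude": 0.40, "GPT": 0.15},
    "F1 Adjusted (CV)": {"Kody": 0.58, "Claude": 0.28, "GPT": 0.07},
}


def multipliers(row: dict[str, float], ref: str = "Kody") -> dict[str, float]:
    """How many times higher the reference score is than each other model's."""
    return {name: row[ref] / value for name, value in row.items() if name != ref}


for metric, row in scoreboard.items():
    print(metric, {name: round(x, 2) for name, x in multipliers(row).items()})
```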

Big picture: Kody is both the most consistent and the top performer. Claude hits the middle ground. GPT-4o, well, struggles with consistency and ends up last.

Clarifying the F1 Score Adjustment

We introduced an adjusted F1 Score to penalize models that were highly inconsistent. The idea is simple: if a model flags a serious issue in one PR but completely misses a similar issue in another, that’s a problem.

Here’s how it worked:

- We applied a consistency penalty (weight = 0.2) to every model, with the size of the hit growing with its variability.

- Models that provided more stable and predictable feedback retained most of their original score.

- Models that had wild fluctuations took a bigger hit.

This isn’t just a theoretical tweak—it reflects the real-world frustration of engineers dealing with AI tools that contradict themselves.

Ongoing Study and Future Plans

This is just our first pass. We’ll keep digging into more PRs, more languages, and more AI models. The point is, we’re not stopping here. As we expand, expect more insights and deeper comparisons, so we can all keep leveling up how we do code reviews.

—

Bottom line? LLMs alone can be a bit wild for reviewing production code. Mix in an agentic pipeline like Kody, and you end up with actual, useful feedback that doesn’t bury you under false positives or random suggestions.