The term code quality gets used all the time, but what does it really mean? It’s a measure of how well a codebase allows your team to build, maintain, and evolve features over time. You can break it down into a few key dimensions: maintainability, readability, reliability, security, and testability. High quality means an engineer can find and fix a bug easily, a new team member understands the intent of the code without needing a six-hour explanation, and the system behaves as expected in production.

The real impact shows up in the team’s speed and stability. A high-quality codebase lets you ship features faster, reduces the number of production incidents, and lowers the total cost of maintenance in the long run. It’s the difference between a system the team can evolve with confidence and one everyone is afraid to touch.

What Code Quality Really Means

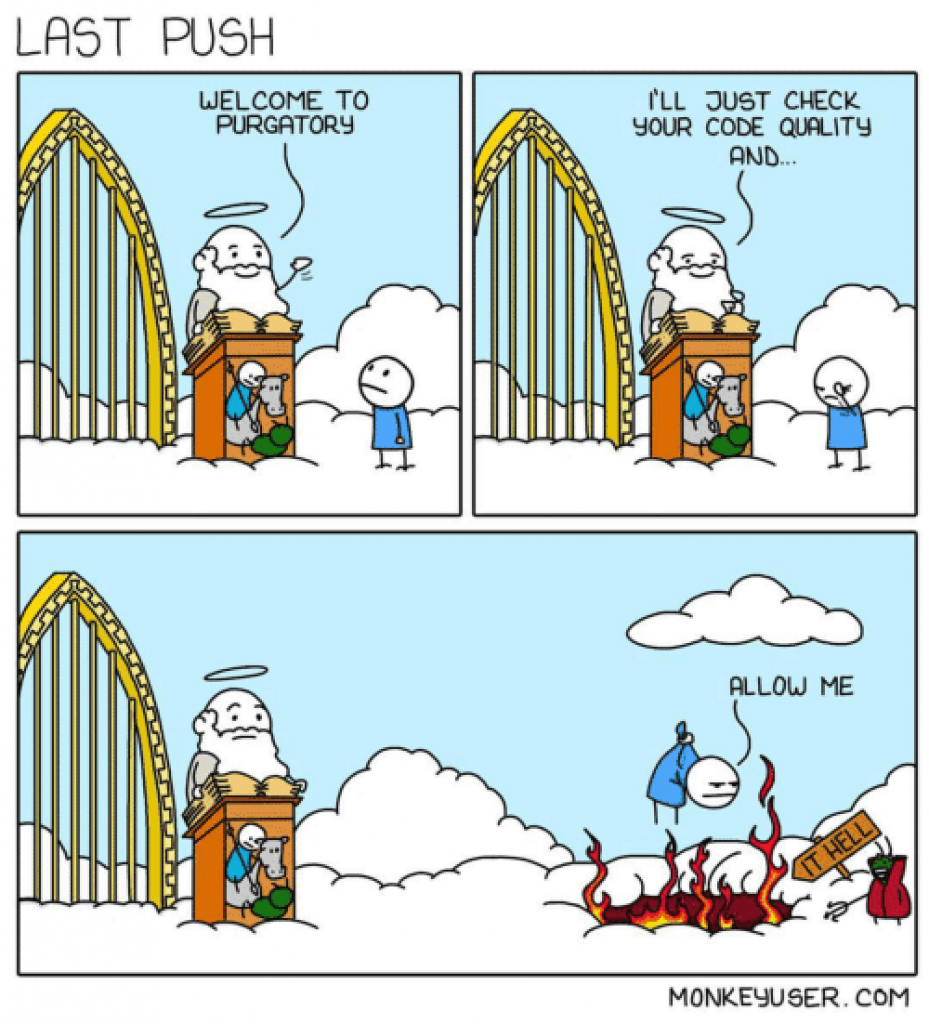

Everyone has seen a project where CI is green and everything still moves slowly. Tasks that should take days take weeks. A small bug fix in one place creates two new bugs somewhere else. That’s latent technical debt accumulating, and it’s where the real cost of neglecting quality shows up: the code gets harder to understand, bugs keep surfacing, and the team starts running out of patience.

This happens because automated checks only take you so far. Static analysis is great at catching syntax errors and style violations, but it can’t tell you whether a system’s design is fragile or whether your core abstractions are wrong. Keeping standards consistent across multiple teams is hard, and many people end up doing the minimum needed to get CI to pass instead of understanding the principles behind the checks. Under pressure, the gap between principle and practice widens, and good intentions get traded for hitting a deadline.

Defining Code Quality in Practice

Real quality has less to do with perfect syntax and more to do with communication. The code you write today is a message to the next engineer who will need to change it, and that might be you six months from now. It’s about making design choices that solve today’s problem without creating a bigger one for tomorrow. That means breaking out of the reactive cycle of fixing bugs and paying down technical debt, and moving to a proactive approach built on careful, intentional work in every pull request.

The Cost of Neglecting Code Quality

Everyone has worked in a codebase where quality took a back seat. The problem rarely shows up as one big failure all at once. It shows up gradually: changes take longer, reviews get harder, and the team’s ability to ship goes down release after release.

The Gradual Loss of Team Velocity

The first sign is that everything starts taking longer than it should. A simple bug fix requires changes across many files because responsibilities aren’t well defined and the logic has spread throughout the system.

Onboarding a new engineer starts taking weeks, since a big part of how things work depends on historical context and decisions that were never captured in the code.

Building a new feature becomes risky work, because any change can affect unrelated parts of the system.

This situation builds up over time. It comes from many shortcuts and one-off fixes that seemed acceptable in the moment but increased coupling between parts of the system. AI tools can accelerate the problem by generating working code without enough context, which replicates problematic patterns at scale when there’s no clear direction.

Immediate Speed vs. Maintainability

The decision to take shortcuts is almost always framed as a trade-off between speed and quality. Shipping a feature this week seems more valuable than refactoring a module for some hypothetical developer in the future. That math is flawed because it ignores the compounding cost of complexity. The time you save today gets paid back with interest every time someone has to work in that part of the code again.

When a system is hard to understand, each new feature is slower to build, harder to test, and riskier to ship to production. The initial speed spike gives way to a long, frustrating plateau where the team spends more time fighting the codebase than building with it.

How to Measure Code Quality Without Annoying Your Team

To improve something, you need to measure it. But metrics can easily be misused, turning into a management control tool instead of a resource for developers. The best approach combines quantitative metrics from automated tools with the qualitative judgment of experienced engineers in code reviews. Numbers provide objective signals, while people bring the context.

Here are some of the most practical metrics to track:

Cyclomatic Complexity

It measures the number of linearly independent paths through a function. Lower is better, because fewer paths mean code that is easier to understand, test, and maintain. A value under 5 is great and 5 to 10 is acceptable, but anything above 15 is a strong signal that the function should be refactored.
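To make the metric concrete, here is a minimal Python sketch that approximates cyclomatic complexity with the standard-library `ast` module. It starts at 1 and adds one for each decision point it finds. The set of counted constructs is a simplification (for example, it ignores `match` statements). `cyclomatic_complexity` is a hypothetical helper, and real analyzers may count slightly differently.

```python
import ast


def cyclomatic_complexity(func_source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(func_source)):
        # Branches and loops each add one path (`elif` is a nested If node).
        if isinstance(node, (ast.If, ast.IfExp, ast.For, ast.AsyncFor,
                             ast.While, ast.ExceptHandler)):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` short-circuits, adding two extra paths.
            complexity += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            # The comprehension loop plus each `if` filter.
            complexity += 1 + len(node.ifs)
    return complexity


sample = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in (2, 3):
        if n % d == 0 and n != d:
            return "composite"
    return "other"
'''
print(cyclomatic_complexity(sample))  # 6
```

The result of 6 falls in the "acceptable" band described above. Each additional `elif` or boolean condition pushes the function toward the refactoring threshold.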

Maintainability Index

It’s a calculated score, usually normalized to a 0 to 100 range. It combines Halstead volume, cyclomatic complexity, and lines of code into a single number that represents how easy the code is to maintain. On that scale, a value above 20 is generally considered healthy, while anything below 10 indicates a serious problem.
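Tools don’t all use the same formula. This sketch assumes the normalized variant used by Microsoft’s Visual Studio: MI = max(0, (171 - 5.2 ln V - 0.23 G - 16.2 ln LOC) × 100 / 171), where V is the Halstead volume and G is the cyclomatic complexity. The function names are hypothetical. The label for the 10 to 20 band is our own, since the text only defines the two ends.

```python
import math


def maintainability_index(halstead_volume: float, cyclomatic: int, loc: int) -> float:
    """Maintainability index normalized to 0-100 (Visual Studio-style formula)."""
    if halstead_volume <= 0 or loc <= 0:
        raise ValueError("halstead_volume and loc must be positive")
    raw = (171
           - 5.2 * math.log(halstead_volume)  # natural log
           - 0.23 * cyclomatic
           - 16.2 * math.log(loc))
    # Rescale from the original 171-point range and clamp negatives to 0.
    return max(0.0, raw * 100 / 171)


def rating(mi: float) -> str:
    """Bands from the text: above 20 healthy, below 10 a serious problem."""
    if mi > 20:
        return "healthy"
    if mi < 10:
        return "serious problem"
    return "borderline"  # the 10-20 band; this label is an assumption


mi = maintainability_index(halstead_volume=1000, cyclomatic=10, loc=100)
print(f"{mi:.1f} {rating(mi)}")  # 34.0 healthy
```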

Test Coverage

It shows what percentage of your code is executed by automated tests. While 100% coverage doesn’t guarantee bug-free code, low coverage (below 70%) is a clear risk. The coverage report also shows exactly which parts of the system have no safety net against regressions.

Code Duplication

It tracks the percentage of code that is duplicated, typically through copy and paste. A duplication rate above 5% usually means you’re missing opportunities for shared abstractions. It also creates extra work whenever a bug has to be fixed in every duplicated spot.
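Clone detectors vary, but a common simple approach compares windows of consecutive lines. This sketch assumes a window of 4 non-blank lines and normalizes only whitespace. It therefore catches exact copy-paste but not copies with renamed variables. `duplication_rate` is a hypothetical helper, not any particular tool’s algorithm.

```python
from collections import defaultdict


def duplication_rate(files: dict[str, str], window: int = 4) -> float:
    """Percentage of non-blank lines that belong to a repeated block of `window` lines."""
    # Strip indentation and drop blank lines so formatting doesn't hide copies.
    normalized = {name: [ln.strip() for ln in text.splitlines() if ln.strip()]
                  for name, text in files.items()}

    # Map each window of consecutive lines to every place it occurs.
    occurrences = defaultdict(list)
    for name, lines in normalized.items():
        for start in range(len(lines) - window + 1):
            occurrences[tuple(lines[start:start + window])].append((name, start))

    # Any window seen more than once marks all of its lines as duplicated.
    duplicated = set()
    for places in occurrences.values():
        if len(places) > 1:
            for name, start in places:
                duplicated.update((name, i) for i in range(start, start + window))

    total = sum(len(lines) for lines in normalized.values())
    return 100.0 * len(duplicated) / total if total else 0.0


shared = "total = 0\nfor item in items:\n    total += item.price\nreturn total\n"
print(duplication_rate({"a.py": shared + "x = 1\n", "b.py": shared + "y = 2\n"}))  # 80.0
```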

Code Smells

Code smells aren’t bugs. They are indicators of deeper issues in the code’s design. Tools can automatically detect smells like “Long Method,” “Large Class,” or “Feature Envy.” Treat them as clues for where to focus refactoring efforts.
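The two structural smells are easy to approximate yourself. This sketch flags “Long Method” and “Large Class” using Python’s `ast` module. The limits (30 lines, 15 methods) are arbitrary placeholders, not recommended values. “Feature Envy” is left out because detecting it requires tracking which objects a method actually uses.

```python
import ast

# Hypothetical thresholds; tune them to your team's conventions.
MAX_FUNCTION_LINES = 30
MAX_CLASS_METHODS = 15


def find_smells(source: str) -> list[str]:
    smells = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is available on AST nodes since Python 3.8.
            length = node.end_lineno - node.lineno + 1
            if length > MAX_FUNCTION_LINES:
                smells.append(f"Long Method: {node.name} ({length} lines)")
        elif isinstance(node, ast.ClassDef):
            methods = [n for n in node.body
                       if isinstance(n, (ast.FunctionDef, ast.AsyncFunctionDef))]
            if len(methods) > MAX_CLASS_METHODS:
                smells.append(f"Large Class: {node.name} ({len(methods)} methods)")
    return smells


long_function = "def big():\n" + "    x = 1\n" * 34
print(find_smells(long_function))  # ['Long Method: big (35 lines)']
```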

Bugs and Vulnerabilities

These are direct measures of quality failures. The goal for any new code going into the main branch should be zero new critical or high-severity vulnerabilities. Tracking bug density (bugs per thousand lines of code) over time can also reveal trends in the health of the codebase.
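Bug density is simple arithmetic: the bug count divided by the size of the code in thousands of lines. Normalizing by size matters because a growing codebase can report more bugs in absolute terms while actually getting healthier. The release figures below are made up and serve only to show how a trend reads.

```python
def bug_density(bug_count: int, lines_of_code: int) -> float:
    """Bugs per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return bug_count / (lines_of_code / 1000)


# Hypothetical numbers, only to illustrate the trend across releases.
releases = [("v1.0", 30, 50_000), ("v1.1", 24, 60_000), ("v1.2", 18, 72_000)]
for name, bugs, loc in releases:
    print(f"{name}: {bug_density(bugs, loc):.2f} bugs/KLOC")
# v1.0: 0.60 bugs/KLOC
# v1.1: 0.40 bugs/KLOC
# v1.2: 0.25 bugs/KLOC
```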

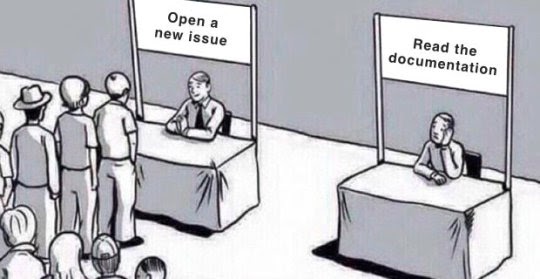

🚨 A word of caution: metrics are signals, not targets. If you tell a team the goal is 100% test coverage, you’ll end up with a pile of useless tests that only assert true === true. The point of these numbers is to start a conversation during code review, not to end it with a pass/fail judgment.
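A hypothetical `apply_discount` function shows the difference. Both tests below execute almost the same lines, so a coverage report can barely tell them apart. Only the second one would catch a regression.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_tautology():
    # Raises coverage by executing the code, but verifies nothing.
    apply_discount(100.0, 10)
    assert True


def test_discount_behavior():
    # Pins down the actual contract, including an invalid input.
    assert apply_discount(100.0, 10) == 90.0
    assert apply_discount(19.99, 0) == 19.99
    try:
        apply_discount(50.0, 150)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")
```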

Best Practices to Maintain Code Quality Over the Long Term

Building and maintaining quality takes a deliberate system, not just good intentions. This needs to be integrated into the team’s daily habits and supported by the development workflow, especially when dealing with a higher volume of AI-generated code.

Core Principles of Code Quality

Instead of a 50-page document with coding standards, focus on a few principles that guide day-to-day decisions. They should be simple, easy to remember, and directly tied to the challenges your team faces.

Writing Code with the Person Who’ll Read It Later in Mind

All code should be written assuming someone else will need to understand it a year from now, without you around to explain it. That means prioritizing clear variable names, small functions with a single responsibility, and comments that explain the “why” behind a complex decision, not the “what” of the code. In a code review, the central question should always be the same: is the intent of this change clear?
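Consider a hypothetical retry helper as a small illustration of a “why” comment. The first comment on the sleep line restates what the code does and adds nothing. The second records the reason behind the decision, which a future reader can’t recover from the code alone. The rate-limiting rationale is invented for the example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def fetch_with_retry(fetch: Callable[[], T], attempts: int = 3,
                     base_delay_s: float = 0.5) -> T:
    """Call `fetch` until it succeeds, retrying on ConnectionError."""
    for attempt in range(attempts):
        try:
            return fetch()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # Out of attempts: surface the original error.
            # What (redundant): sleep base_delay_s times 2 to the power of attempt.
            # Why (useful): the upstream API throttles bursts of requests, so
            # immediate retries would keep failing; exponential backoff spreads them out.
            time.sleep(base_delay_s * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```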

Testability and Maintainability

Code that’s hard to test is usually code that’s poorly designed. By making testability a requirement for the team, you encourage better design practices, like dependency injection and clear separation of responsibilities. Automated tests are the foundation that lets you refactor safely and change code without being afraid of breaking what already works. For example, you can add the following types of tests:

Unit Tests are the foundation. They validate the smallest possible unit of code, usually a single function, in isolation. They should be fast and specific. Got a complex sorting algorithm or a validation function packed with rules? Cover it with unit tests.

Integration Tests verify that the pieces work together. It’s not enough for each function to run well on its own; they need to talk to each other correctly. This is where you ensure, for example, that an API call actually updates the database as expected.

End-to-End (E2E) Tests simulate the user experience. They’re the “final boss”: they automate real flows inside the application, like “log in, add an item to the cart, and check out.” They’re slower and more brittle, but essential for finding bugs that only show up when the full system is running.
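The dependency-injection point and the first two test types fit in one small sketch. The `checkout` function receives its storage as a parameter. A unit test can therefore pass an in-memory fake, while an integration test passes a real adapter backed by an in-memory database from Python’s standard `sqlite3` module. All names here are hypothetical. E2E tests are not shown because they need a browser-automation tool driving the running application.

```python
import sqlite3
from typing import Protocol


class OrderRepository(Protocol):
    def save_total(self, order_id: str, total: float) -> None: ...


def checkout(order_id: str, prices: list[float], repo: OrderRepository) -> float:
    """Business logic receives its storage as a dependency instead of creating it."""
    if not prices:
        raise ValueError("cannot check out an empty cart")
    total = round(sum(prices), 2)
    repo.save_total(order_id, total)
    return total


class InMemoryRepo:
    """Fake for unit tests: fast, no I/O."""
    def __init__(self) -> None:
        self.saved: dict[str, float] = {}

    def save_total(self, order_id: str, total: float) -> None:
        self.saved[order_id] = total


class SqliteRepo:
    """Real adapter exercised by integration tests."""
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        conn.execute("CREATE TABLE IF NOT EXISTS orders (id TEXT PRIMARY KEY, total REAL)")

    def save_total(self, order_id: str, total: float) -> None:
        with self.conn:  # commits the transaction on success
            self.conn.execute("INSERT INTO orders (id, total) VALUES (?, ?)",
                              (order_id, total))


def test_checkout_unit():
    repo = InMemoryRepo()
    assert checkout("o-1", [10.0, 5.5], repo) == 15.5
    assert repo.saved == {"o-1": 15.5}


def test_checkout_integration():
    conn = sqlite3.connect(":memory:")
    checkout("o-2", [3.25, 4.75], SqliteRepo(conn))
    row = conn.execute("SELECT total FROM orders WHERE id = ?", ("o-2",)).fetchone()
    assert row == (8.0,)
```

Test runners such as pytest collect the two `test_` functions automatically. The unit test stays fast because it never touches a database. The integration test checks that the SQL and the business logic actually agree.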

Observability and Ease of Debugging in Production

Quality doesn’t end at the pull request. The code needs to make sense when it’s running in production. That means structured logs, metrics, and tracing from the start. When something goes wrong, the data to investigate the problem needs to be available. If you can’t understand the code’s behavior under real load, that is a quality problem.
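Structured logs are the easiest of the three to start with. Here is a minimal sketch that uses only the standard `logging` and `json` modules to emit one JSON object per line. In practice, many teams use a dedicated library or their platform’s agent instead. The `fields` attribute passed through `extra=` is a hypothetical convention for this example. Metrics and tracing are not shown.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Keys passed via extra= become attributes on the record.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the root logger is configured

logger.info("order placed", extra={"fields": {"order_id": "o-42", "duration_ms": 87}})
```

Because every line is machine-readable, a log platform can filter by `order_id` or alert on `duration_ms` without fragile text parsing.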

A 30-Day Plan to Improve Code Quality

Week 1: Establish a Baseline and Align on Principles

First, figure out where you are. Run a static analysis tool (like SonarQube, CodeClimate, or a similar service) on your main branch to get an initial report of the key metrics. The goal isn’t to fix everything at once, but to understand your starting point. Then, hold a meeting with the team to discuss the results and align on a shared definition of what “good enough” means for the project. Use that conversation to create a “Definition of Done” checklist for pull requests.

Week 2: Introduce a Definition of Done for Pull Requests

Add the checklist you created to the pull request template. This makes quality standards explicit and gives reviewers a consistent baseline. This week, enforcement is manual. The focus is to build shared habits and promote conversations about the code during the review process. Your checklist should be a living document, but a good starting point includes:

- The code is understandable (clear variable names, simple logic).

- It follows the codebase’s existing standards and conventions.

- It has enough automated tests (unit, integration).

- It doesn’t introduce new lint errors or relevant code smells.

- It includes documentation for new public APIs or complex logic.

- The change is small and focused on a single responsibility.

Week 3: Automate with Quality Gates in CI

Now it’s time to let machines do the boring work. Configure your CI pipeline to run quality analysis on every pull request. The key is to differentiate between gates that block the merge and gates that only post a warning. This keeps the pipeline from becoming a source of frustration, while still providing valuable automated feedback.

Blockers (Hard Gates): Should fail the build and prevent the merge.

- New critical or high-severity vulnerabilities are introduced.

- Unit tests are failing.

- Test coverage drops below an agreed threshold (for example, 70%).

Warnings (Soft Gates): Should comment on the PR, but allow the merge if the team agrees.

- A new relevant code smell is detected.

- Cyclomatic complexity in a modified method exceeds the team’s threshold (for example, 15).

- A small amount of new code duplication is found.
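The split between hard and soft gates can be expressed as a small script that a CI step runs after the analysis tools. This is a sketch under assumptions. The `PrMetrics` fields are hypothetical and would, in practice, be parsed from your tools’ reports. The thresholds mirror the examples above (70% coverage, complexity 15).

```python
from dataclasses import dataclass, field


@dataclass
class PrMetrics:
    """Hypothetical per-PR numbers, e.g. parsed from an analysis report."""
    new_critical_vulns: int
    failing_unit_tests: int
    coverage_percent: float
    new_code_smells: int
    max_changed_complexity: int
    new_duplicated_lines: int


@dataclass
class GateResult:
    blockers: list[str] = field(default_factory=list)
    warnings: list[str] = field(default_factory=list)

    @property
    def can_merge(self) -> bool:
        # Only hard gates block; warnings are left to the team's judgment.
        return not self.blockers


def evaluate(m: PrMetrics, min_coverage: float = 70.0,
             max_complexity: int = 15) -> GateResult:
    result = GateResult()
    # Hard gates: any hit fails the build.
    if m.new_critical_vulns > 0:
        result.blockers.append(f"{m.new_critical_vulns} new critical/high-severity vulnerabilities")
    if m.failing_unit_tests > 0:
        result.blockers.append(f"{m.failing_unit_tests} failing unit tests")
    if m.coverage_percent < min_coverage:
        result.blockers.append(f"coverage {m.coverage_percent:.1f}% is below {min_coverage:.0f}%")
    # Soft gates: posted as PR comments, merge still allowed.
    if m.new_code_smells > 0:
        result.warnings.append(f"{m.new_code_smells} new code smells")
    if m.max_changed_complexity > max_complexity:
        result.warnings.append(f"complexity {m.max_changed_complexity} exceeds {max_complexity}")
    if m.new_duplicated_lines > 0:
        result.warnings.append(f"{m.new_duplicated_lines} newly duplicated lines")
    return result


def exit_code(result: GateResult) -> int:
    """A CI step would exit with this value so the runner fails the job on blockers."""
    return 0 if result.can_merge else 1


report = evaluate(PrMetrics(new_critical_vulns=0, failing_unit_tests=0,
                            coverage_percent=74.2, new_code_smells=1,
                            max_changed_complexity=18, new_duplicated_lines=0))
for warning in report.warnings:
    print("WARNING:", warning)
print("exit code:", exit_code(report))  # exit code: 0
```

Keeping the thresholds as parameters makes the Week 4 adjustments a one-line change instead of a pipeline rewrite.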

Week 4: Review, Adjust, and Plan Next Steps

At the end of the month, look at the metrics again. Did they improve? Run a retrospective with the team to discuss the new flow. Are the CI gates too strict or too loose? Is the checklist helping or just creating noise? Use the feedback to adjust the process.
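Answering “did they improve?” is easier if the Week 1 numbers were saved. This sketch assumes both snapshots are plain dictionaries with hypothetical metric names. For each metric, it records whether higher values are better, because rising coverage is good while a rising duplication rate is bad.

```python
# Whether a higher value counts as an improvement (hypothetical metric names).
HIGHER_IS_BETTER = {
    "coverage": True,
    "maintainability_index": True,
    "duplication": False,
    "code_smells": False,
    "critical_vulns": False,
}


def compare(baseline: dict[str, float], current: dict[str, float]) -> dict[str, str]:
    report = {}
    for metric, higher_better in HIGHER_IS_BETTER.items():
        if metric not in baseline or metric not in current:
            continue  # only compare metrics present in both snapshots
        delta = current[metric] - baseline[metric]
        if delta == 0:
            report[metric] = "unchanged"
        elif (delta > 0) == higher_better:
            report[metric] = f"improved ({delta:+g})"
        else:
            report[metric] = f"regressed ({delta:+g})"
    return report


week1 = {"coverage": 62.0, "duplication": 7.5, "code_smells": 140}
week4 = {"coverage": 68.5, "duplication": 6.0, "code_smells": 151}
for metric, verdict in compare(week1, week4).items():
    print(f"{metric}: {verdict}")
# coverage: improved (+6.5)
# duplication: improved (-1.5)
# code_smells: regressed (+11)
```

A mixed result like this one is a useful retrospective prompt. For example, a rise in smells alongside better coverage may mean the new tests are fine but the soft gates are being ignored.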

Finally, make this proactive work part of the routine by setting aside dedicated time to tackle the issues flagged by the tools. That can be a recurring “technical health” sprint or a few hours every Friday.